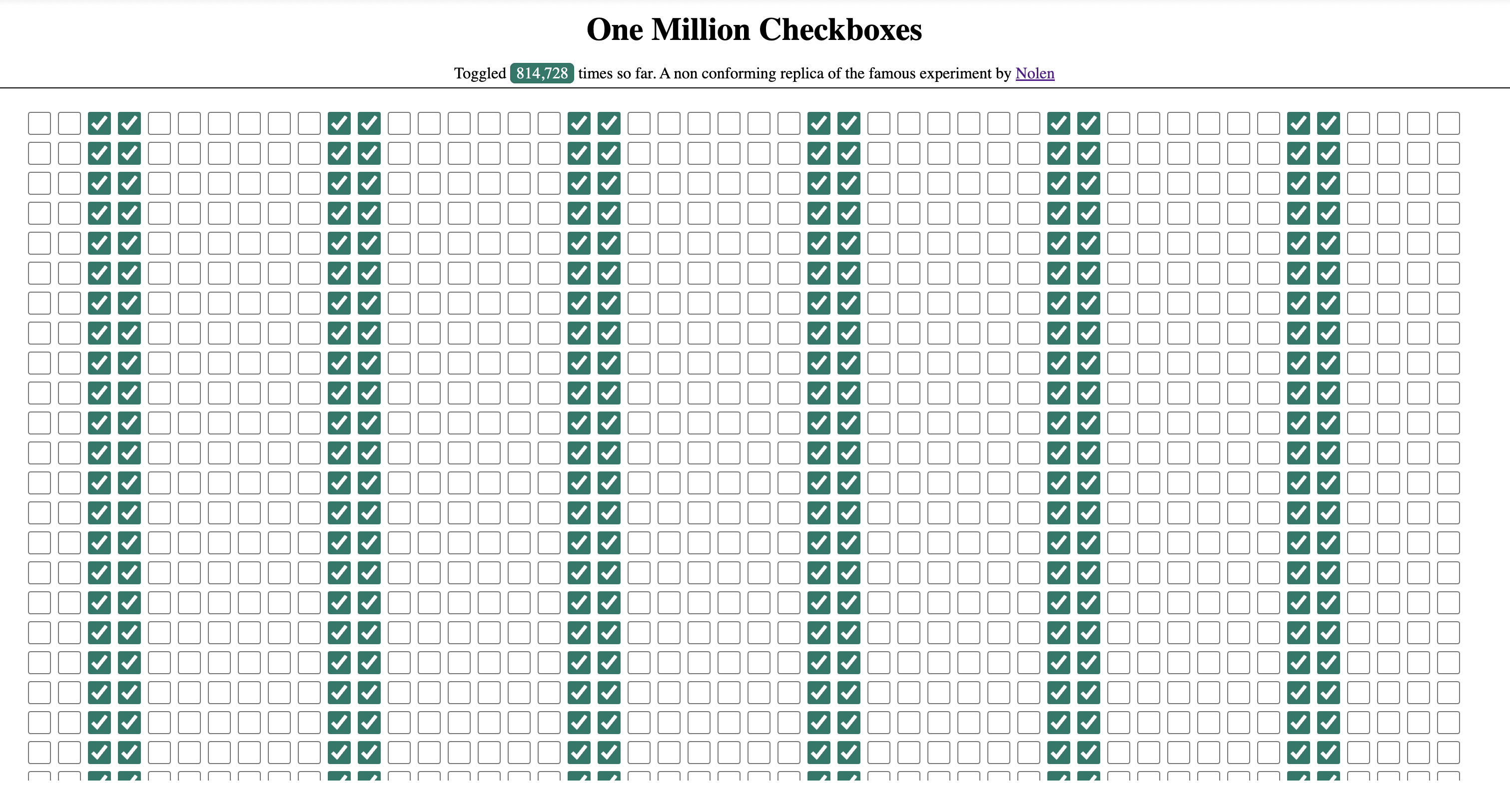

I first found out about the One Million Checkboxes website from a video on Prime’s YouTube channel. I’ll admit that’s how I find out about a lot of tech news these days. Ever since then I have wanted to build it myself. I recently started working with Go, so I wanted to build it directly in Go instead of starting with Python and then moving to Go.

While building this I learned two important things:

- It’s much easier to build something when someone has already made all the mistakes

- There are still some challenging things, especially when you are working with WebSockets and binary data for the first time

My only rule for this exercise was that I would not look at the code from the original repo.

The first order of business was to choose a WebSocket library for Go. My first thought was to use gorilla/websocket, but the documentation of the golang.org/x/net/websocket package has a note pointing to coder/websocket, so that’s what I went with.

Creating a basic websocket endpoint using coder/websocket is quite simple.

```go
// Skipped error handling for brevity
http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
	c, _ := websocket.Accept(w, r, nil)
	defer c.CloseNow()

	ctx, cancel := context.WithTimeout(context.Background(), time.Second*10)
	defer cancel()

	var v interface{}
	wsjson.Read(ctx, c, &v)
	log.Printf("received: %v", v)

	c.Close(websocket.StatusNormalClosure, "")
})
```

After trying out a hello world of sorts, I was ready to implement the first version of the backend. The chat server example proved to be a good starting point for that.

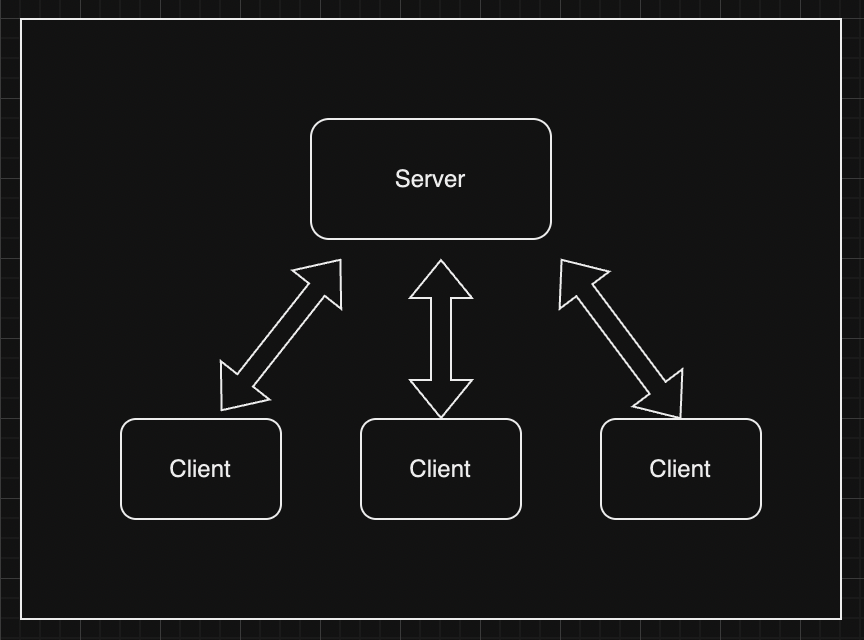

I did not want to add Redis just yet. For the first version I created a server that would accept WebSocket connections and forward every incoming message to all connected clients.

The server did not maintain any state, so any new client would start with a blank slate. The main data structure was the eventServer struct.

```go
type eventServer struct {
	// ...
	// Some fields omitted for brevity

	// clientsMu controls access to the clients map
	clientsMu sync.Mutex

	// clients stores a map of all connected clients
	clients map[*client]struct{}

	// serveMux routes the various endpoints to the appropriate handler.
	serveMux http.ServeMux
}
```

It stores a map of all the connected clients. Whenever a client starts a websocket connection on /subscribe the event server creates a client and adds it to the map.
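The bookkeeping described here can be sketched as follows. This is a minimal illustration, not the code from the project: the method bodies are assumptions based on the names `addClient` and `deleteClient` used later in the post, and `clientCount` is a hypothetical helper added only so the example prints something.

```go
package main

import (
	"fmt"
	"sync"
)

// client is a stand-in for the client type shown in the post.
type client struct {
	events chan []byte
}

// eventServer keeps only the fields needed for this sketch.
type eventServer struct {
	clientsMu sync.Mutex
	clients   map[*client]struct{}
}

// addClient registers c so that it can receive broadcast events.
func (es *eventServer) addClient(c *client) {
	es.clientsMu.Lock()
	defer es.clientsMu.Unlock()
	es.clients[c] = struct{}{}
}

// deleteClient removes c. Deleting a key that is not present is a no-op.
func (es *eventServer) deleteClient(c *client) {
	es.clientsMu.Lock()
	defer es.clientsMu.Unlock()
	delete(es.clients, c)
}

// clientCount is a hypothetical helper that reports the number of clients.
func (es *eventServer) clientCount() int {
	es.clientsMu.Lock()
	defer es.clientsMu.Unlock()
	return len(es.clients)
}

func main() {
	es := &eventServer{clients: make(map[*client]struct{})}
	c := &client{events: make(chan []byte, 16)}

	es.addClient(c)
	fmt.Println(es.clientCount()) // 1

	es.deleteClient(c)
	fmt.Println(es.clientCount()) // 0
}
```

Using an empty struct as the map value makes the map behave like a set without spending memory on the values.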

The client is even simpler. It has a channel called events and any events sent to this channel are forwarded to the connected client.

```go
// client represents a connected client.
// Events are sent on the events channel.
type client struct {
	// events sent on this channel are sent to the websocket.
	events chan []byte

	// sendEvents controls whether the client wants to receive events or
	// just send events.
	// Used for creating bots that can send a lot of events to mimic traffic
	sendEvents bool
}
```

The sendEvents flag is used to create write-only bot clients, which will be useful later.

When a browser starts a connection, the eventServer.subscribe() method is called. This method creates a new client, adds it to the clients map, and starts two loops, one for reading from and one for writing to this websocket. The read loop runs in its own goroutine.

```go
func (es *eventServer) subscribe(w http.ResponseWriter, r *http.Request) error {
	c := &client{
		events:     make(chan []byte, es.eventBufferLen),
		sendEvents: true,
	}
	es.addClient(c)
	defer es.deleteClient(c)

	conn, err := websocket.Accept(w, r, &websocket.AcceptOptions{
		OriginPatterns: []string{"*"},
	})
	// Omitted error handling
	defer conn.CloseNow()

	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	// Start reading from and writing to the websocket
	go c.startReadLoop(es, conn, ctx)
	err = c.startWriteLoop(conn, ctx)
	return err
}
```

The read loop reads incoming messages and adds each one to the events channel of every connected client. The write loop waits for messages on the events channel and writes them to the websocket.

At this stage, this separation does not offer a lot of benefit. But it becomes useful once we add Redis pub/sub.
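The fan-out step of the read loop can be sketched without any websocket code. This is an assumption about how such a broadcast might look, not the project's implementation: `publish` is a hypothetical method, and dropping messages for clients whose buffer is full is one possible policy among several.

```go
package main

import (
	"fmt"
	"sync"
)

type client struct {
	events     chan []byte
	sendEvents bool
}

type eventServer struct {
	clientsMu sync.Mutex
	clients   map[*client]struct{}
}

// publish queues msg for every client that wants to receive events.
// It never blocks: if a client's buffer is full the message is skipped
// for that client and counted in the return value.
func (es *eventServer) publish(msg []byte) (dropped int) {
	es.clientsMu.Lock()
	defer es.clientsMu.Unlock()

	for c := range es.clients {
		if !c.sendEvents {
			continue // write-only bot, it does not want events back
		}
		select {
		case c.events <- msg:
		default:
			dropped++
		}
	}
	return dropped
}

func main() {
	browser := &client{events: make(chan []byte, 1), sendEvents: true}
	bot := &client{events: make(chan []byte, 1), sendEvents: false}
	es := &eventServer{clients: map[*client]struct{}{
		browser: struct{}{},
		bot:     struct{}{},
	}}

	fmt.Println(es.publish([]byte{1, 0, 0, 0, 7})) // 0, queued for the browser
	fmt.Println(es.publish([]byte{0, 0, 0, 0, 7})) // 1, the browser buffer is full
	fmt.Println(len(browser.events), len(bot.events))
}
```

The non-blocking send keeps one slow client from stalling everyone else, at the cost of that client missing updates.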

I wanted to use binary instead of JSON for the event messages to reduce the payload size. Each message is 5 bytes long.

```go
action := 1
id := 1235

msg := make([]byte, 5)
msg[0] = byte(action)
msg[1] = byte(id >> 24)
msg[2] = byte(id >> 16)
msg[3] = byte(id >> 8)
msg[4] = byte(id)
```

The first byte represents the action: 1 for check and 0 for uncheck. The next 4 bytes represent the id of the checkbox. The id is stored in big-endian byte order, which means the most significant byte comes first. You can read more about it here: Understanding Endianness with Go
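The same layout can also be produced with the standard library's encoding/binary package instead of manual shifts. The sketch below is an alternative, not what the project uses; `encodeEvent` and `decodeEvent` are hypothetical helper names.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// eventSize is 1 action byte plus a 4-byte big-endian checkbox id.
const eventSize = 5

// encodeEvent builds a single 5-byte event message.
func encodeEvent(action byte, id uint32) []byte {
	msg := make([]byte, eventSize)
	msg[0] = action
	binary.BigEndian.PutUint32(msg[1:], id)
	return msg
}

// decodeEvent is the inverse of encodeEvent.
func decodeEvent(msg []byte) (action byte, id uint32, err error) {
	if len(msg) != eventSize {
		return 0, 0, errors.New("event must be exactly 5 bytes")
	}
	return msg[0], binary.BigEndian.Uint32(msg[1:]), nil
}

func main() {
	msg := encodeEvent(1, 1235)
	fmt.Printf("% x\n", msg) // 01 00 00 04 d3

	action, id, err := decodeEvent(msg)
	fmt.Println(action, id, err) // 1 1235 <nil>
}
```

1235 is 0x04D3, so the last two bytes are 04 and d3, with the most significant byte first.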

For the client I went with Nolen’s original choice of react-window. It has been a while since I worked with React. Back then, the recommended way to create a new React app was npx create-react-app. It looks like the recommendation has since changed to using a framework such as Next.js. I had no intention of using a full-fledged framework, so instead I created a plain old HTML page and added React to it, with Parcel as the bundler. More on that here: Adding React to an HTML Website

I created a grid component using the FixedSizeGrid component. The state was stored in a boolean array of size 1,000,000.

```jsx
export const Grid = ({ checkHandler, bits }) => {
  const totalCount = 1_000_000;
  const cellHeight = 30;
  const cellWidth = 30;

  const { width, height } = useWindowSize();
  const gridHeight = height - 150;
  const gridWidth = width - 50;
  const columnCount = Math.floor(gridWidth / cellWidth);
  const cellConfig = { columnCount, checkHandler, bits };

  return (
    <FixedSizeGrid
      columnCount={columnCount}
      rowCount={Math.ceil(totalCount / columnCount)}
      columnWidth={cellWidth}
      rowHeight={cellHeight}
      height={gridHeight}
      width={gridWidth}
      itemData={cellConfig}
    >
      {Cell}
    </FixedSizeGrid>
  );
};
```

I couldn’t find a way to display exactly 1,000,000 checkboxes in the FixedSizeGrid component. The grid always renders full rows, so unless the column count divides a million evenly, the last row runs past the millionth cell. So I added a temporary (now permanent) hack to just hide checkboxes beyond 1 million.

The cell component uses the data prop passed down from FixedSizeGrid to render the state and handle the change events.

```jsx
export const Cell = ({ columnIndex, rowIndex, style, data }) => {
  const id = rowIndex * data.columnCount + columnIndex;

  // Hide the padding cells in the last row (ids are 0-based).
  if (id > 999_999) {
    return <></>;
  }

  const styles = {
    cell: {
      // Styles
    },
  };

  return (
    <div style={styles.cell}>
      <input
        type="checkbox"
        id={id}
        title={"Checkbox " + id}
        style={styles.checkbox}
        checked={data.bits[id]}
        onChange={data.checkHandler}
      />
    </div>
  );
};
```

For the WebSocket connection I used react-use-websocket.

```js
const WS_URL = `${BASE_WS_URL}/subscribe`;

const { sendMessage, lastMessage, readyState } = useWebSocket(WS_URL, {
  share: false,
  shouldReconnect: (closeEvent) => {
    return closeEvent.code !== 1000;
  },
});

// Run when a new WebSocket message is received (lastMessage)
useEffect(() => {
  handleServerEvents(lastMessage, setBits);
}, [lastMessage]);
```

The handleServerEvents() function is probably the only (slightly) complex function in the frontend. It reads the data as a blob and converts it into an array buffer.

```js
export const handleServerEvents = (message, setState) => {
  if (message === null) {
    return;
  }

  const blob = message.data;
  if (!(blob instanceof Blob)) {
    console.error("Invalid message: Expected Blob but received something else");
    return;
  }

  blob
    .arrayBuffer()
    .then((buffer) => {
      processArrayBuffer(buffer);
    })
    .catch((error) => {
      console.error("Failed to read Blob:", error);
    });

  const processArrayBuffer = (buffer) => {
    // Defined in the next section.
  };
};
```

The server sends the events in batches. The processArrayBuffer() function reads each event from the batch and applies the update to the state. I have taken the liberty of assuming a fixed event size of 5 bytes for the sake of simplicity. It uses the first byte of an event as the action (0 for uncheck, 1 for check) and the next 4 bytes as the id in big-endian format.

```js
const processArrayBuffer = (buffer) => {
  // Create a DataView to read the binary data
  const view = new DataView(buffer);

  // Validate the message length
  if (view.byteLength % 5 !== 0) {
    console.error(
      `Invalid message: Expected sets of 5 bytes, but got ${view.byteLength}`
    );
    return;
  }

  setState((prevState) => {
    const updatedState = [...prevState];

    for (let i = 0; i < view.byteLength / 5; i++) {
      // Read the first byte as the action
      // 0 for "uncheck", 1 for "check"
      const action = view.getUint8(i * 5);

      // Read the next 4 bytes as the ID (32-bit integer, big-endian)
      const id = view.getUint32(i * 5 + 1, false);

      if (action === 1 || action === 0) {
        updatedState[id] = action === 1;
      }
    }

    return updatedState;
  });
};
```
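For a Go consumer of the same batches, such as a test or a bot client, the decoding logic can be ported almost line for line. This is a sketch under the same fixed 5-byte assumption; `applyBatch` is a hypothetical name, and skipping out-of-range ids is an extra guard that the JavaScript version does not have.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

const eventSize = 5

// applyBatch applies a batch of 5-byte events to state in place and
// returns the number of events that were applied.
func applyBatch(state []bool, batch []byte) (int, error) {
	if len(batch)%eventSize != 0 {
		return 0, fmt.Errorf("expected sets of %d bytes, but got %d", eventSize, len(batch))
	}

	applied := 0
	for off := 0; off < len(batch); off += eventSize {
		action := batch[off]
		id := binary.BigEndian.Uint32(batch[off+1 : off+eventSize])

		// Ignore unknown actions and ids outside the state.
		if action > 1 || uint64(id) >= uint64(len(state)) {
			continue
		}
		state[id] = action == 1
		applied++
	}
	return applied, nil
}

func main() {
	state := make([]bool, 10)
	batch := []byte{
		1, 0, 0, 0, 3, // check 3
		1, 0, 0, 0, 4, // check 4
		0, 0, 0, 0, 3, // uncheck 3
	}

	n, err := applyBatch(state, batch)
	fmt.Println(n, err, state[3], state[4]) // 3 <nil> false true
}
```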

The checkboxToggleHandler() was triggered on checkbox change. It updated the state and sent the event to the connected websocket. If the websocket wasn’t connected yet, I just dropped the event. The events are sent in the same structure as they are received. It creates an empty array buffer of size 5, adds the action and the id, and sends it off.

```js
let checkboxToggleHandler = (e) => {
  if (readyState !== ReadyState.OPEN) {
    // @todo: Save event for retrying.
    console.log("Websocket connection is not open yet");
    return;
  }

  const checkbox = e.target;
  const id = parseInt(checkbox.id);

  setBits((prevState) => {
    const updatedState = [...prevState];
    updatedState[id] = checkbox.checked;
    return updatedState;
  });

  const action = checkbox.checked ? 1 : 0;
  const buffer = new ArrayBuffer(5);
  const view = new DataView(buffer);

  // Set the action byte
  // (0 for uncheck, 1 for check)
  view.setUint8(0, action);

  // Sent using big-endian byte order
  view.setUint32(1, id);

  sendMessage(buffer);
};
```

With this I had the first version of the page working. Any connected clients would see the updates from everyone else.

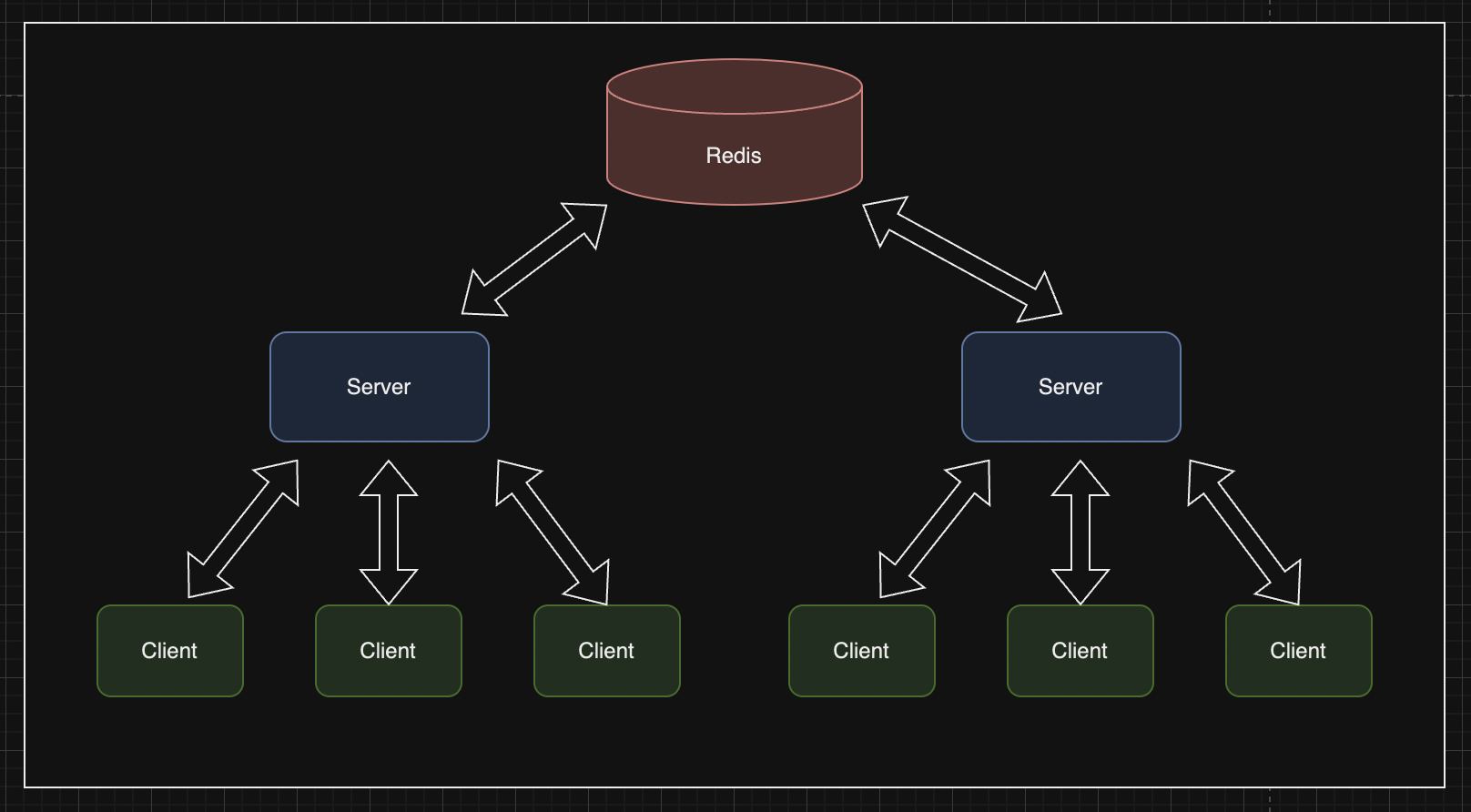

But they had no way of getting the initial state, and so everyone saw a different view. The next step was to maintain the state in the backend. On page load the clients would fetch the initial state and everyone would be happy. We could store the state in memory. But that would make it difficult to load balance across multiple servers if need be.

So, just like the original version I went with Redis to store and relay the state between clients.

I didn’t actually try adding multiple servers. So, I am sure there would be some challenges in getting it to work. But it should work in principle.

The entire state is stored in a Redis string, initialized to all zeros. I created an init method that checks whether the state key exists and, if it does not, creates a string of a million zeros.

```go
func (rc *RedisClient) init(key string) {
	ctx := context.Background()

	_, err1 := rc.client.Get(ctx, key).Result()
	if err1 == nil {
		fmt.Printf("Key: %s already set \n", key)
		return
	}

	bitString := strings.Repeat("0", 1_000_000)
	err := rc.client.Set(ctx, key, bitString, 0).Err()
	if err != nil {
		panic(err)
	}
}
```

But this approach had a bug that bit me right away. Some of the checkboxes were checked by default. And it didn’t seem random. There was a pattern to it.

I tried looking at the value stored in the key. It was all zeros.

```
127.0.0.1:6379> GETRANGE bitCache 0 100
"00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000"
```

But, when I tried getting a specific bit it was turned on.

```
127.0.0.1:6379> getbit bitCache 10
(integer) 1
```

A few quick searches later, I realized that I didn’t want a string of a million zeros. I wanted a string of a million bits. The character "0" is ASCII 48, which in binary is

0 0 1 1 0 0 0 0

And that caused the patterned bug above. After updating the logic to set the bits to 0, everything was fine. For now.
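The pattern is easy to reproduce without Redis. The sketch below relies on Redis numbering bits from the most significant bit of the first byte, which is how its bitmap commands address offsets; `getBit` is a hypothetical helper that mimics GETBIT on a plain byte slice.

```go
package main

import "fmt"

// getBit mimics Redis GETBIT: offset 0 is the most significant bit of
// the first byte. Offsets past the end of the data read as 0.
func getBit(data []byte, offset int) int {
	if offset/8 >= len(data) {
		return 0
	}
	return int(data[offset/8]>>(7-offset%8)) & 1
}

func main() {
	// The buggy state: the ASCII character '0' repeated.
	data := []byte("0000")

	fmt.Printf("%08b\n", data[0]) // 00110000

	// Print every offset in the first two bytes whose bit is set.
	for offset := 0; offset < 16; offset++ {
		if getBit(data, offset) == 1 {
			fmt.Print(offset, " ") // 2 3 10 11
		}
	}
	fmt.Println()
}
```

Bits 2 and 3 of every byte are set, so checkboxes 2, 3, 10, 11, 18, 19 and so on start out checked, which matches the `getbit bitCache 10` result above.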

Another optimization was possible because Redis automagically grows the string to fit the specified bit. The added bits are set to 0. So all we need to do is set the bit at index 999,999 to 0, and all preceding bits will automagically be set to 0.

```go
func (rc *RedisClient) init(key string) {
	ctx := context.Background()

	_, err1 := rc.client.Get(ctx, key).Result()
	if err1 == nil {
		fmt.Printf("Key: %s already set \n", key)
		return
	}

	err := rc.client.SetBit(ctx, key, 1_000_000-1, 0).Err()
	if err != nil {
		panic(err)
	}

	val, err := rc.client.Get(ctx, key).Result()
	if err != nil {
		fmt.Printf("could not get key: %v\n", err)
	}
	fmt.Printf("Len of the stored value: %d\n", len(val))
}
```
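As a sanity check on the size, a million bits should occupy 125,000 bytes. The sketch below imitates the growth behaviour on a plain byte slice; `setBit` is a hypothetical helper written for this illustration, not a Redis client call.

```go
package main

import "fmt"

// setBit imitates how SETBIT grows a value: if offset lies past the end,
// the slice is extended with zero bytes before the bit is written.
// Offset 0 is the most significant bit of the first byte.
func setBit(data []byte, offset int, value bool) []byte {
	need := offset/8 + 1
	if len(data) < need {
		data = append(data, make([]byte, need-len(data))...)
	}

	mask := byte(0x80) >> (offset % 8)
	if value {
		data[offset/8] |= mask
	} else {
		data[offset/8] &^= mask
	}
	return data
}

func main() {
	// Writing a 0 at the last index is enough to allocate the whole state.
	state := setBit(nil, 1_000_000-1, false)
	fmt.Println(len(state)) // 125000
}
```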

Initial state load

When a new client connects, it calls an API to fetch the current state. This API simply reads the key from Redis and returns it as “application/octet-stream”.

```go
// getStateHandler handles the requests to the GET /state route.
// It returns the state of all the checkboxes stored in the cache.
func (es *eventServer) getStateHandler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Access-Control-Allow-Origin", es.originPatterns)

	if r.Method != http.MethodGet {
		http.Error(w, http.StatusText(http.StatusMethodNotAllowed), http.StatusMethodNotAllowed)
		return
	}

	val, err := es.cacheClient.get(key)
	if err != nil {
		es.logf("%v", err)
		// Do not send an empty body as if it were valid state.
		http.Error(w, http.StatusText(http.StatusInternalServerError), http.StatusInternalServerError)
		return
	}

	w.Header().Set("Content-Type", "application/octet-stream")
	fmt.Fprint(w, val)
}
```
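The body returned by this handler is the raw bitmap, so a consumer has to unpack it using the same bit numbering as Redis. Here is a minimal sketch of that step in Go, for example for a bot or a test; `unpackBits` is a hypothetical helper, and a browser client would do the equivalent in JavaScript.

```go
package main

import "fmt"

// unpackBits expands a bitmap into one bool per checkbox.
// Bit 0 is the most significant bit of the first byte.
// Bits beyond the end of data are reported as false.
func unpackBits(data []byte, count int) []bool {
	bits := make([]bool, count)
	for i := 0; i < count && i/8 < len(data); i++ {
		mask := byte(0x80) >> (i % 8)
		bits[i] = data[i/8]&mask != 0
	}
	return bits
}

func main() {
	// 0x20 sets bit 2 of the first byte, 0x01 sets bit 7 of the second.
	bits := unpackBits([]byte{0x20, 0x01}, 20)
	fmt.Println(bits[2], bits[15], bits[0], bits[16]) // true true false false
}
```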

Last updated - 6th Dec 2024

[To be continued…]